Introduction

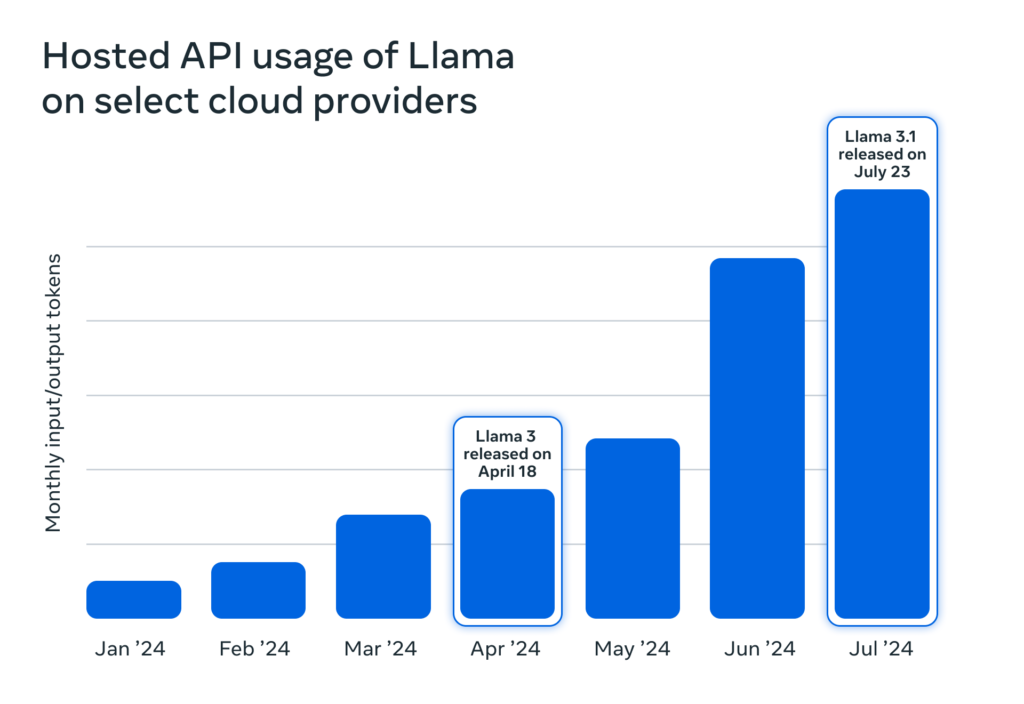

Llama 3.2 is the latest release in Meta’s Llama series (originally LLaMA, for Large Language Model Meta AI). Building on its predecessors, from LLaMA 1 and Llama 2 through Llama 3 and 3.1, it adds lightweight models for on-device use and improvements in natural language processing (NLP) efficiency. But how does it really compare to other models, and what’s new in this version? In this review, we’ll look at the features, performance, and real-world applications of Llama 3.2.

1. Evolution of the Llama Series

Origins of the Llama Series (LLaMA 1 and 2)

Before we get into the nitty-gritty of Llama 3.2, let’s quickly revisit its roots. Meta AI launched the LLaMA series in 2023 as a lightweight alternative to large language models like GPT-3. The first iteration drew attention for its efficiency, and Llama 2 built on it with chat-tuned variants and a license that permitted commercial use. Llama 3 and 3.1 followed in 2024, with Llama 3.1 extending the context window to 128K tokens.

What’s New in Llama 3.2?

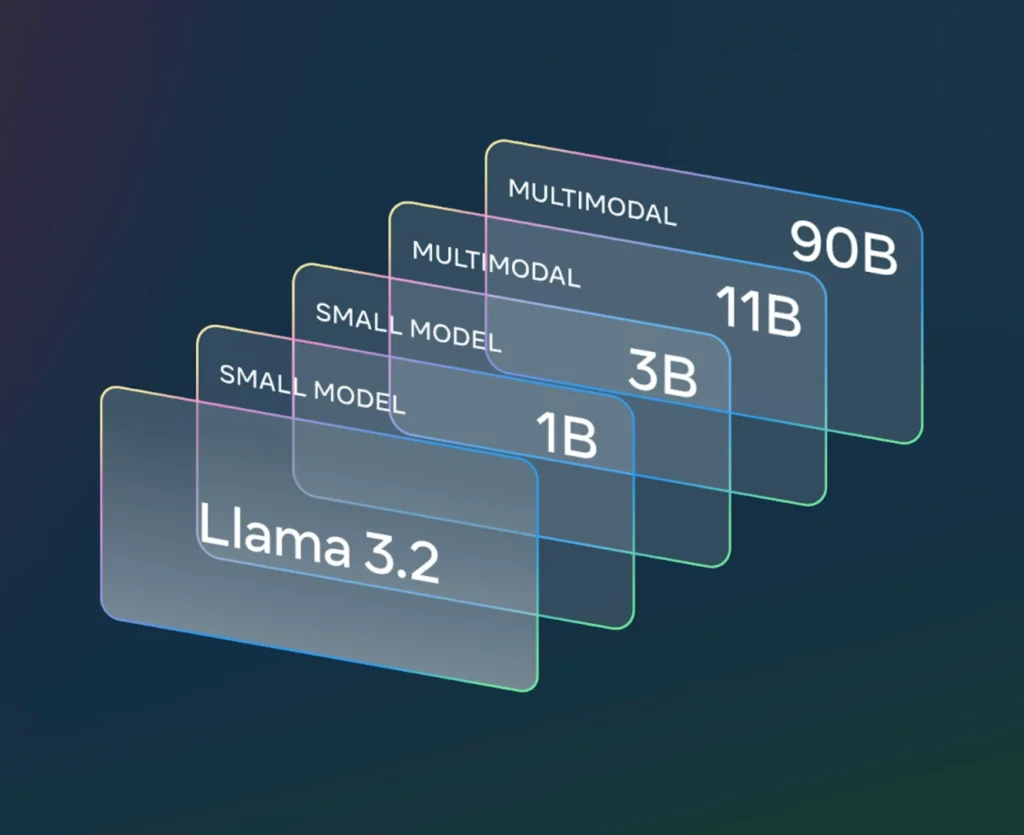

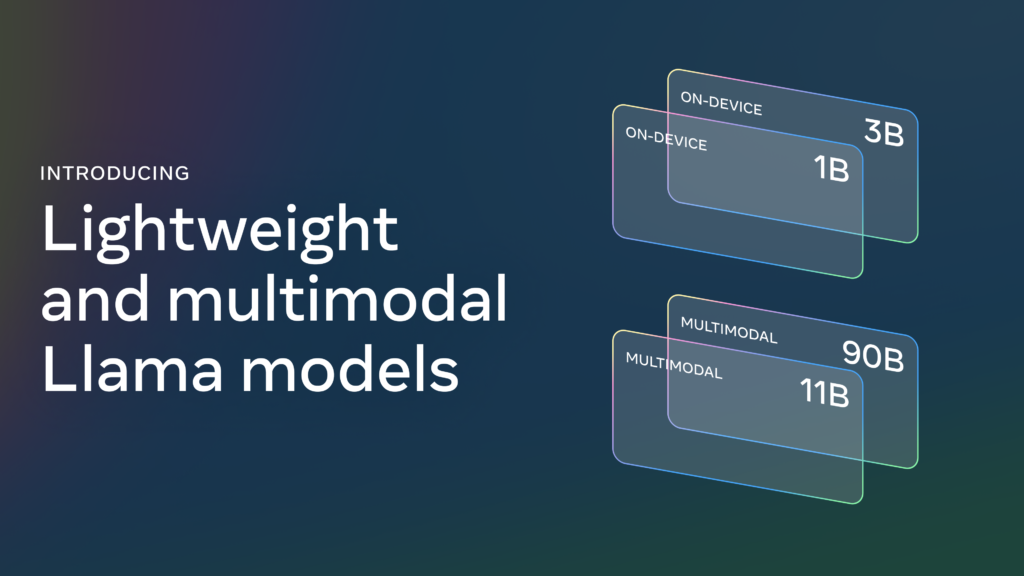

Llama 3.2 introduces two main additions to the family: lightweight 1B and 3B text models built for phones and edge devices, and 11B and 90B vision models that accept images as well as text. The small models in particular make it one of the more resource-efficient releases available today.

According to Meta: “The Llama 3.2 1B and 3B models support context length of 128K tokens and are state-of-the-art in their class for on-device use cases like summarization, instruction following, and rewriting tasks running locally at the edge. These models are enabled on day one for Qualcomm and MediaTek hardware and optimized for Arm processors.”
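To make the 128K-token figure concrete, here is a minimal sketch of checking whether a long document fits in the context window before summarizing it, and splitting it if not. The four-characters-per-token ratio is a rough assumption for English text, not a real tokenizer, and the function names are illustrative only.

```python
# Rough check of whether a document fits in a 128K-token context window.
# CHARS_PER_TOKEN is a crude heuristic for English text, not a tokenizer.
CONTEXT_TOKENS = 128_000  # nominal figure quoted above; the exact limit is in the model config
CHARS_PER_TOKEN = 4       # assumption: about 4 characters per token on average


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def split_for_context(text: str, reserve_tokens: int = 2_000) -> list[str]:
    """Split text into pieces that each fit the window, leaving room
    (reserve_tokens) for the instructions and the generated answer."""
    budget_chars = (CONTEXT_TOKENS - reserve_tokens) * CHARS_PER_TOKEN
    return [text[i:i + budget_chars] for i in range(0, len(text), budget_chars)]
```

For real workloads, count tokens with the model’s own tokenizer; the heuristic above is only good enough for a first sanity check.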

2. Model Architecture

Size and Parameters of Llama 3.2

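One of the standout features of Llama 3.2 is its range of sizes rather than sheer size. It comes as lightweight 1B and 3B text models and as larger 11B and 90B vision models. The small models are meant to run on phones and edge devices, while the larger ones handle more complex queries and deliver more nuanced outputs.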
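Parameter count translates fairly directly into memory. The sketch below estimates how much memory the weights alone occupy at different numeric precisions. It uses the nominal sizes of the 1B and 3B models mentioned earlier (the true parameter counts differ slightly) and ignores activations and the key-value cache, so treat the results as lower bounds.

```python
def weight_memory_gib(n_params: float, bits_per_param: int) -> float:
    """Memory needed to hold the weights alone, in GiB."""
    return n_params * bits_per_param / 8 / 2**30


# Nominal parameter counts (assumption: "1B" = 1e9, "3B" = 3e9).
for name, n_params in [("1B", 1e9), ("3B", 3e9)]:
    for bits in (16, 8, 4):
        print(f"{name} @ {bits}-bit: {weight_memory_gib(n_params, bits):.2f} GiB")
```

The same arithmetic shows why quantizing from 16-bit to 4-bit weights cuts the footprint to a quarter, which is often what makes on-device use practical.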

How Does It Compare to LLaMA 2?

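Like the rest of the Llama 3 family, Llama 3.2 is a decoder-only transformer. It uses grouped-query attention across all model sizes, which shrinks the key-value cache and reduces computational overhead at inference time; in Llama 2, only the larger models used it. It also uses a tokenizer with a vocabulary of roughly 128K tokens, up from 32K in Llama 2, so the same text is encoded in fewer tokens.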

3. Training Data and Techniques

Data Sources Used in Llama 3.2

Meta says Llama 3.2 was pretrained on data from publicly available sources, but it has not published a detailed breakdown of those sources. A broad, diverse dataset helps the model generalize better across different industries.

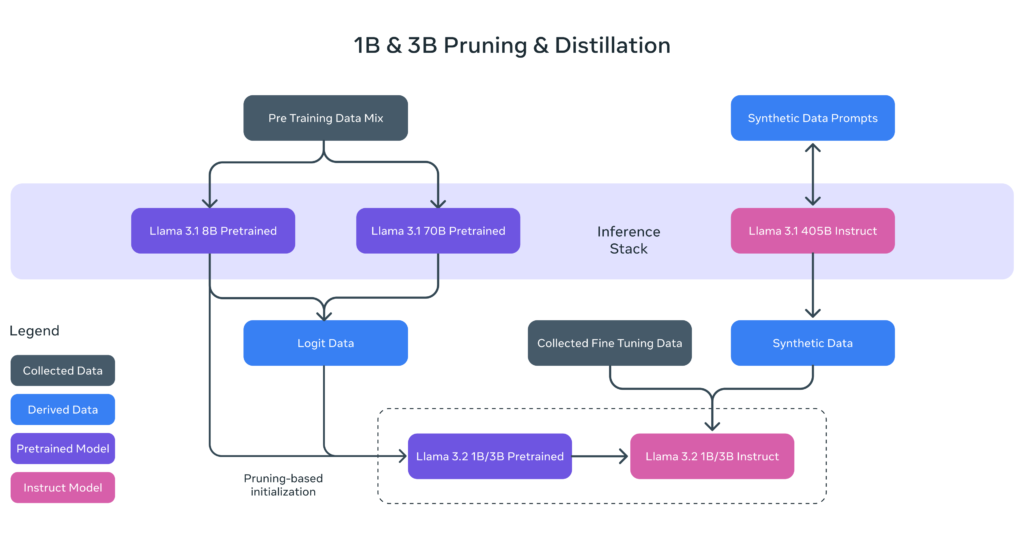

New Training Techniques in Llama 3.2

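For the 1B and 3B models, Meta AI used pruning and knowledge distillation rather than training from scratch. Pruning starts from a larger Llama 3.1 model and removes parts of the network to reach a smaller size; distillation then uses larger Llama 3.1 models as teachers so the small model recovers much of the lost performance. The result is a compact model without a proportional drop in quality.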

4. Performance and Accuracy

Benchmarks and Metrics

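Meta evaluates Llama 3.2 on benchmarks such as MMLU for general knowledge, GSM8K for math reasoning, and IFEval for instruction following, rather than on older suites like GLUE and SuperGLUE. According to Meta, the 3B model outperforms comparably sized open models on instruction following, summarization, and tool use, while the 1B model is competitive in its class. Check the official model card for exact scores before relying on any single number.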
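Benchmark scores of this kind usually reduce to a simple accuracy calculation over question and answer pairs. The following is a minimal sketch of exact-match scoring, not the evaluation harness Meta uses; `toy_model` and the three sample questions are hypothetical stand-ins for a real inference call and a real benchmark.

```python
from typing import Callable, Iterable


def normalize(answer: str) -> str:
    """Lowercase and collapse whitespace so trivial differences don't count."""
    return " ".join(answer.lower().split())


def exact_match_accuracy(
    model: Callable[[str], str],
    examples: Iterable[tuple[str, str]],
) -> float:
    """Fraction of (question, reference) pairs the model answers exactly."""
    examples = list(examples)
    if not examples:
        raise ValueError("no examples to evaluate")
    correct = sum(normalize(model(q)) == normalize(ref) for q, ref in examples)
    return correct / len(examples)


# Stand-in for a real model call (hypothetical; swap in your own inference function).
def toy_model(question: str) -> str:
    return {"2 + 2 = ?": "4", "Capital of France?": "Paris"}.get(question, "unknown")


toy_set = [
    ("2 + 2 = ?", "4"),
    ("Capital of France?", "paris"),
    ("Largest planet?", "Jupiter"),
]
print(exact_match_accuracy(toy_model, toy_set))
```

Real benchmarks add prompt templates, few-shot examples, and answer extraction, which is why scores from different harnesses are not always comparable.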

Performance in NLP Tasks

Whether it’s summarization, translation, or question answering, Llama 3.2 excels across a wide range of NLP tasks. Its language generation capabilities are more coherent, providing responses that are closer to human-like conversation.

5. Efficiency and Speed

Computational Efficiency

The smaller Llama 3.2 models are built to run with modest resources. The 1B and 3B variants target phones, laptops, and edge devices, which suits businesses without large data centers. Direct comparison with hosted models like GPT-4 is difficult because OpenAI has not published that model’s size or hardware requirements.

Inference Latency and Responsiveness

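Inference latency depends on model size, hardware, and quantization, so no single figure applies. The 1B and 3B models are small enough to run on-device, and Meta notes that responses can feel instantaneous in that setting because no round trip to a server is needed. That makes them a strong contender for applications needing quick, real-time processing.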
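Because latency varies so much by setup, it is worth measuring on your own hardware. Here is a minimal timing sketch that works with any generation function returning a list of tokens. `fake_generate` is a hypothetical placeholder that only sleeps and echoes words; replace it with a call into your actual runtime.

```python
import time
from statistics import median
from typing import Callable


def measure_latency(generate: Callable[[str], list[str]], prompt: str, runs: int = 5) -> dict:
    """Time several calls to generate() and report median latency and throughput."""
    latencies, tokens_out = [], 0
    for _ in range(runs):
        start = time.perf_counter()
        tokens = generate(prompt)
        latencies.append(time.perf_counter() - start)
        tokens_out = len(tokens)  # token count of the most recent run
    mid = median(latencies)
    return {
        "median_seconds": mid,
        "tokens_per_second": tokens_out / mid if mid > 0 else float("inf"),
        "tokens": tokens_out,
    }


def fake_generate(prompt: str) -> list[str]:
    """Placeholder generator (hypothetical): sleeps briefly, echoes the prompt's words."""
    time.sleep(0.01)
    return prompt.split()


print(measure_latency(fake_generate, "how fast is this model", runs=3))
```

Using the median rather than the mean keeps one slow warm-up call from skewing the result.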

6. Adaptability and Fine-Tuning

Fine-Tuning Capabilities of Llama 3.2

Llama 3.2 is easy to fine-tune for specific tasks. Whether you’re working on chatbot applications, recommendation engines, or content generation, the model’s adaptability allows for smooth customization.

Pre-trained vs Fine-tuned: Which is Better for Your Needs?

The pre-trained version of Llama 3.2 is highly capable, but fine-tuning it for specific domains (like legal text, medical reports, or technical documentation) brings out its best. Depending on your use case, fine-tuning can dramatically improve performance in niche areas.
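Most of the practical work in domain fine-tuning is preparing the data. As a rough sketch, the code below wraps question and answer pairs in a role-tagged chat format, holds out a validation slice, and serializes the result as JSON Lines. The record layout and function names are assumptions for illustration; check what your fine-tuning tool expects before using it.

```python
import json
import random


def to_chat_record(question: str, answer: str, system: str) -> dict:
    """Wrap one Q/A pair in a role-tagged message list."""
    return {"messages": [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
        {"role": "assistant", "content": answer},
    ]}


def split_records(records: list[dict], val_fraction: float = 0.1, seed: int = 0):
    """Shuffle deterministically and hold out a validation slice."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines, one record per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)
```

Holding out a validation set matters because a model fine-tuned on a small niche dataset can overfit quickly, and the held-out examples are the easiest way to notice.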

7. Multimodal Capabilities

Can Llama 3.2 Handle Images, Audio, or Video?

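Images, yes. Llama 3.2 is the first Llama release with vision models: the 11B and 90B Vision variants accept images alongside text for tasks such as document understanding, chart reading, and image captioning. The 1B and 3B models are text-only. No Llama 3.2 model natively supports audio or video inputs, but the models can be extended with external frameworks for such tasks, for example a speech-to-text front end.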

8. Compatibility with Other AI Tools

Integration with Popular Frameworks

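Llama 3.2 is built around the PyTorch ecosystem. Meta’s tooling includes torchtune for fine-tuning and ExecuTorch for on-device deployment, and the models can also be run locally through tools such as Ollama or in the cloud through hosted providers. If you work in TensorFlow, check whether your toolchain supports the model before committing to it.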

Third-Party Tools for Enhanced Functionality

Several third-party tools like Hugging Face Transformers support Llama 3.2, giving developers access to enhanced functionality such as API integration, pre-trained models, and easy deployment options.
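As an illustration, here is a hedged sketch of a single chat turn through the Hugging Face Transformers `pipeline` API. It assumes `transformers` and `torch` are installed and that you have been granted access to the gated `meta-llama/Llama-3.2-1B-Instruct` repository; the default system prompt and the function names are placeholders, and the output handling follows the chat-style pipeline behavior of recent Transformers versions.

```python
def build_messages(user_prompt: str,
                   system_prompt: str = "You are a concise assistant.") -> list[dict]:
    """Build the role-tagged chat message list that chat pipelines accept."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generate_reply(user_prompt: str,
                   model_id: str = "meta-llama/Llama-3.2-1B-Instruct",
                   max_new_tokens: int = 128) -> str:
    """Run one chat turn. Needs `pip install transformers torch` and access
    to the gated model repository on the Hugging Face Hub."""
    from transformers import pipeline  # imported lazily: heavy optional dependency

    pipe = pipeline("text-generation", model=model_id)
    result = pipe(build_messages(user_prompt), max_new_tokens=max_new_tokens)
    # For chat-style input, generated_text holds the whole conversation;
    # the last message is the assistant's reply.
    return result[0]["generated_text"][-1]["content"]


# Example call (downloads the model on first use):
# print(generate_reply("Summarize what a context window is in one sentence."))
```

Building the pipeline inside the function keeps the sketch short; in a real application you would create it once and reuse it across requests.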

9. Ethical Considerations

Bias and Fairness in Llama 3.2

Like any large language model, Llama 3.2 is not immune to bias. However, Meta AI has taken significant steps to reduce biases by incorporating diverse datasets and monitoring the model’s output for fairness.

Privacy and Security Concerns

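Privacy with Llama 3.2 depends largely on how it is deployed. Because the weights can be run locally, prompts and outputs need not leave your own device or servers, which Meta highlights as a benefit of the on-device 1B and 3B models. The model itself does not guarantee secure processing, so protecting sensitive data remains the responsibility of the application built around it.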
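One common safeguard when handling sensitive data is to strip obvious identifiers from text before it reaches any model. The sketch below is an illustrative example, not part of Llama 3.2 or any Meta tooling: it uses two deliberately simple regular expressions that catch typical e-mail addresses and phone-like digit runs, and it will miss many other kinds of personal data.

```python
import re

# Simple illustrative patterns (assumptions, not a complete PII detector).
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace e-mail addresses and phone-like digit runs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


print(redact("Mail jane.doe@example.com or call +1 (555) 123-4567."))
```

For regulated data, a dedicated PII detection tool and a review of where logs and prompts are stored are better starting points than hand-written patterns.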

Red teaming

Meta describes its red-teaming process as follows: “Using both human and AI-enabled red teaming, we seek to understand how our models perform against different types of adversarial actors and activities. We partner with subject matter experts in critical risk areas and have also assembled a team of experts from a variety of backgrounds. Our red-teaming efforts incorporate experts across various disciplines, including cybersecurity, adversarial machine learning, and responsible AI, in addition to multilingual content specialists with backgrounds in AI security and safety in specific geographic markets.”

10. Real-World Applications

Use Cases in Business

From customer support chatbots to AI-driven research assistants, Llama 3.2 has numerous applications in the business world. Companies are using it to automate tasks, improve content creation, and streamline operations.

Impact on Research and Academia

Llama 3.2’s ability to generate complex, coherent text makes it a valuable tool for academic researchers, particularly in areas requiring large-scale text analysis and synthesis.

11. Availability and Pricing

Open-Source Availability

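Llama 3.2’s weights are free to download under the Llama 3.2 Community License. This is not an open-source license in the strict sense, since it comes with an acceptable use policy and other restrictions, but it lets developers and researchers use and modify the model at no cost. This aligns with Meta’s stated mission of making AI development more democratic.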

Pricing for Commercial Use

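The community license permits commercial use without a licensing fee. The main exception is for very large companies: organizations with more than 700 million monthly active users must request a separate license from Meta. Businesses deploying Llama 3.2 at scale should still budget for infrastructure or hosted-API costs, and should read the license and acceptable use policy before launch.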

12. Model Customization and Extensibility

How Easily Can Llama 3.2 Be Extended?

The architecture of Llama 3.2 is designed to be modular, meaning developers can easily add new layers or integrate it with other AI models for specific tasks.

Plugin and Extension Support

Llama 3.2 has no built-in plugin system, but its tool-calling support lets it request calls to external functions and APIs that your application then executes. That makes it flexible for both developers and businesses.

13. User Community and Support

Developer Community Around Llama 3.2

The developer community surrounding Llama 3.2 is growing rapidly, with forums, GitHub repositories, and third-party support popping up to help users maximize the model’s potential.

Available Documentation and Tutorials

Llama 3.2 comes with extensive documentation, tutorials, and guides, making it easy for even beginners to get started with the model.

14. Comparisons with Other AI Models

Llama 3.2 vs GPT-4

While both Llama 3.2 and GPT-4 are powerful, Llama 3.2 is optimized for computational efficiency and open-source use, making it a great alternative for those who need a lightweight, customizable model.

Llama 3.2 vs Other Transformer Models

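BERT and T5 are older transformer designs: BERT is an encoder-only model aimed at language understanding, and T5 is an encoder-decoder model for text-to-text tasks. Llama 3.2 is a decoder-only generative model, so it stands out for open-ended generation, instruction following, and long-context work, and it adapts well to fine-tuning for industry-specific applications. For narrow classification tasks, a small BERT-style model can still be the faster and cheaper choice.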

15. Future of Llama 3.x Series

Predicted Advancements in Future Versions

Llama 3.2 is likely just the beginning. Future versions could see improvements in multimodal capabilities, better bias handling, and enhanced fine-tuning options.

How Llama 3.2 Sets the Stage for Next-Gen AI

With its efficient architecture and open-source accessibility, Llama 3.2 is setting the standard for next-generation AI, pushing the boundaries of what’s possible in language models.

Conclusion

Llama 3.2 represents a major leap forward in AI language models, offering both performance and flexibility. Whether you’re a business looking to streamline operations or a developer seeking an open-source solution, Llama 3.2 is worth exploring. Its computational efficiency, easy customization, and strong community support make it a standout choice in the evolving world of AI.

FAQs

Is Llama 3.2 suitable for small businesses?

Yes, its efficient architecture allows it to run on less powerful hardware, making it accessible for small to medium businesses.

What makes Llama 3.2 different from LLaMA 2?

Llama 3.2 adds lightweight 1B and 3B models for on-device use, 11B and 90B vision models that accept images, and a 128K-token context window, compared with 4K tokens in Llama 2.

Can Llama 3.2 be used for multimodal AI tasks?

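Partly. The 11B and 90B Vision models accept images alongside text, while the 1B and 3B models are text-only. Audio and video are not natively supported, but the models can be integrated with external frameworks for those applications.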

How can I fine-tune Llama 3.2 for my specific needs?

Llama 3.2 offers flexible fine-tuning capabilities, allowing you to adjust the model for domain-specific tasks.

What are the hardware requirements for running Llama 3.2?

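It depends on the model. The 1B and 3B models are designed to run on phones, laptops, and other edge devices, especially when quantized. The 11B and 90B vision models need a capable GPU or a cloud-based solution for good performance.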